Everyone Except Me is Wrong About AI

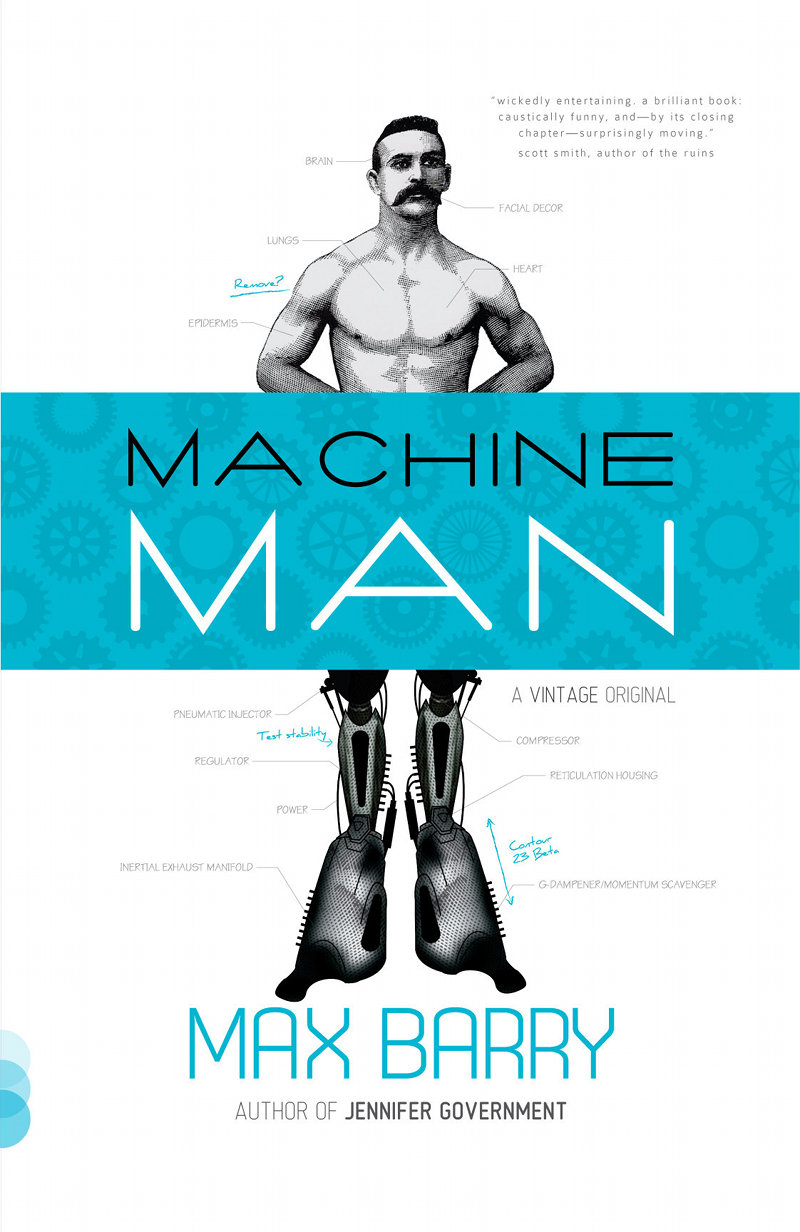

I wrote about AI already, but that was about how we’re all going to die. Since then, the conversation has become more nuanced. Now I’m encountering more subtle ideas I think are totally wrong. So because I know better, here’s why.

“AI is already here.”

ChatBots are good at figuring out what comes next when you start a sentence with, “The capital of Antigua is…” That’s pretty cool. We didn’t have that before. But it’s not intelligence. It’s almost the opposite of intelligence, like the difference between the kid in high school who was always studying and that guy who never studied but could talk and is now a real estate agent. Both can sound smart but only one knows what he’s talking about.

BY THE WAY, it’s very on-brand for Earth 2023 that our robots are designed to sound plausible rather than be correct. Remember in Star Wars how C-3PO delivered a precise survival probability of flying into an asteroid field? (3720 to 1.) And Han Solo was like, “Shut up, C-3PO,” because he was too cool and handsome to be bothered by math. OR SO WE THOUGHT, because that was the kind of AI we were imagining in the 1980s: AI that was, before anything else, correct.

But if C-3PO was a ChatBot, no wonder Han had no time for his bullshit. All C-3PO could do was regurgitate what other people tended to say about surviving asteroid fields, on average.

“AI is almost here.”

Sure, ChatBots have their flaws, like asserting gross fabrications with confidence, but look at the rate of progress! Check out how Stable Diffusion can produce high-quality images in seconds by quietly aggregating decades of work by uncredited artists! It’s not perfect, but imagine where we’ll be in a few years!

I will concede that AI has made tremendous progress in these two critical areas:

- pretending to know what it’s talking about

- stealing from artists

I’m not contesting that. But I don’t agree that honing these skills will lead to genuine AI, of the C-3PO variety, which is basically a person, only artificial. To get that, we need AI that can perceive things, and form an internal model of reality, and use it to make predictions. If instead it’s only good at imitating what everyone else does, that’s not really AI. It’s just statistics.

“AI is just statistics.”

So, yes, everyone realized that if you call your 18-line Python program an “AI,” it gets more interest. Now when someone says “AI,” they might mean C-3PO, or ChatGPT, or just a plain old computer program that until six months ago was a utility or model or algorithm.

When we mean C-3PO, we should probably say “AGI” (artificial general intelligence), or “strong AI,” but nobody likes redefining terms just because they’ve been appropriated, so we don’t. We do believe, though, that there’s a big difference between an AI that is self-aware, has a mental model of reality, and can fall in love, and an AI that auto-aggregates blog posts. We only feel bad about turning off the first one.

However, even the C-3PO type of AI will undoubtedly be “just statistics.” The problem with “it’s just statistics” is the “just.” It implies that statistics can never lead to anything life-like. And that truly intelligent, conscious creatures like us possess something entirely separate and perhaps magical, which nobody is likely to engineer anytime soon.

This is a dumb comfort thought. Chickens are just beak and feathers. Trees are just wood and leaves. Humans are just food and chemistry. We can dismiss anything like that. The universe doesn’t care what you’re made out of.

“AI is not already here.”

We seem to think there’s a line, toward which we’re creeping with AI that’s increasingly sophisticated, until suddenly: Eureka! It has gained anima, a soul, consciousness, some special quality that we will admit to sharing with it. And then we have AI citizens, who should probably have rights, and not be property.

So we try to guess when this line might be crossed—next year, twenty years, a hundred years, never? We eye each AI iteration, considering how human-like it is, whether it has finally gained the necessary soul/anima/consciousness/je ne sais quoi. But there is no line. There’s no binary yes/no. There wasn’t when life emerged from the primordial soup, or became intelligent, or recognizably human.

AI will never gain the special magical quality that makes us truly intelligent beings, because we don’t have it, either. We’re wasting our time when we try to figure out how human-like the machines are; we should examine how machine-like we already are.

Because we’re predictable as heck. We develop mechanical faults. The Wikipedia page on free will is 16,000 words long and both-sides it.*

We are creatures of chemistry and biology. They might be probability and statistics. Potato, potato. There’s life all around us, of varying shades; intelligence of all kinds. We live in a universe that isn’t picky about what you’re made of. We’re here now, but so are they.

Bonus ideas:

The Alignment Problem

This is the idea that the real problem with AI is figuring out how to make it do what we want but without the part where it destroys humanity because it didn’t realize that when we asked for paperclips, we meant without plundering the Earth’s core. Okay, sure. That’s a good first step. But aligning it with human morality only helps so long as there aren’t humans who want to plunder Earth’s core, too. And there are. Also there are humans who don’t want to plunder Earth’s core, necessarily, but do want to have a job and get paid, and capitalism is awesome at packaging those people up into core-plundering machines.

AI will be good unless we make it bad, so let’s just not do that

This one speaks to a pervasive failing on the part of smart people, which is the belief that once they figure out a solution, they’ve solved the problem. But we figured out how to avoid catastrophic climate change decades ago; we’re just not doing it. There is no “we.” “We” can’t decide anything. “You” can just not build bad AI. You can’t stop me from doing it.

Stochastic Road Murder

The free market is great and all, but I do have an issue with this part, where companies promote 2.5-ton urban assault vehicles to people who can be talked into dropping $100,000 by telling them it’s big.

That’s the tagline on a billboard I passed on Sunday, my daughter in the car, the L plates up, as she learns to drive. “IT’S BIG,” says the billboard, that’s the whole tagline, and the Ford F-150 is all grille, as seen from the perspective of someone small who’s about to go under the wheels.

Not that the tray is big, or the mileage is big, oh no! Those would be rational arguments, and it’s all emotional appeals for these cars, like “EATS OTHER CARS FOR BREAKFAST,” that’s another one.

I’m a very reasonable person, so I don’t want to ban big cars. I just think we should start jailing marketing people who decide the target market for steroid trucks is irrational people. Don’t get me wrong. I’m not saying the marketing people are personally running down kids in the streets. They just may as well be. Either click Send on your creatives, or trot on down to street level and take a baseball bat to a pedestrian; either way, you’re going to cause a predictable level of harm.

It’s simple economics: Capitalism demands that we jail those marketers. It’s not a morality issue. Maybe you’re fine with a few broken bodies in the service of letting fragile men feel alpha, and, well, okay, but the free market demands we correctly allocate costs to those who produce them. So if we’re rewarding marketers with bags of cash for putting murder cars in the hands of the people we absolutely least want to have murder cars, we must also present them with the invoice for the ensuing pedestrian bodies.

It’s about setting correct market incentives. You wouldn’t even have to jail that many marketing people. Well, maybe you would. To send a message. But I think even a few marketing people in jail, or, you know, heavily fined, or publicly humiliated, all those are good, would be enough to insert a little pause into a marketing exec’s thoughts. Just a little pause, right after: “I love the simple emotive pull of this ‘LEAVES OTHER ROAD USERS FOR DEAD’ campaign, that’ll speak clearly to dudes who perceive lane changes as personal attacks.” Let’s see where that pause gets us.

End of the World, with Terminators

So this AI business, huh, this is getting some traction. It’s evolving so fast, just the other day I had to go back and take out all the parts in the book I’m working on that carefully establish the plausibility of competent AI in the near future.

Luckily I’m familiar with topics that become exponentially more absurd while you’re writing about them, because I got started in political satire.

People wonder if AI will destroy us all, and please, don’t worry, because of course it will and there’s nothing you can do about it. Honestly, people are asking the wrong question with AI. The question isn’t whether it will destroy us but how.

And people have the wrong idea about that, too, from sci-fi stories and Terminator movies where it’s humans versus machines. You wish. That would be great. Imagine the solidarity in a noble fight for the future of the species.

But no, no, it will be more like Elon Musk has a Terminator, and Apple has ten Terminators, and the US Government has some Terminators but they don’t work properly and are under investigation. Also Democrats have their own Terminators and so do the Republicans and Rupert Murdoch and everyone, basically, with money to spend and influence to accumulate.

You don’t have a Terminator. You can, like, rent five percent of a Terminator to help do your taxes.

But everyone else, everyone up there, has Terminators. And they fight, but not each other, because that’s risky: a Terminator going head to head with another Terminator. You don’t do that unless you’re sure your Terminator will win. Smarter is deploying your Terminator to acquire more power and wealth from people who don’t have Terminators. Then you can afford more Terminators.

So this is scams run by Terminators, right, you see how filled up the world has become with scams, well, imagine those scams but now they’re created by something smarter than you. They look and sound authentic, they know how persuasion works better than you do, and now there are masses of people sending money and voting based on something that isn’t even real. I mean, that’s today, right, so add Terminators and multiply.

We’ve connected the world and opened windows to its every corner and you know what, people are still people, jammed full of flaws, believing anything that tickles the cortex. We have good people at the top, but also people who don’t give a damn about anyone outside their own inner circle, who have been richly rewarded for this personality trait, and now they can afford Terminators. You can see how AI will destroy us because it’s already happening; it’s this, amplified, so that the next time someone wants to entrench some poverty, or kick a trillion-dollar bill to the next generation, a Terminator helps them do it.

With money we will get Terminators, Caesar said, and with Terminators we will get money; that’s how it happens. I’m not afraid of AI; AI will allow us to unlock wonders. But I’m afraid of your AI.

We Care a Lot

You know what’s amazing: We can create things just by caring. That’s all you need to do. Just care. Two people care about each other: Pow! Now there’s a relationship. Before, nothing. But now anything might happen. They might move in together, quit jobs, travel, get in a fight.

It doesn’t just work on people. It can be anything. Look at all those sports teams who kick a ball or whatever and it’s televised and people flock to watch in giant stadiums. Just because we care! The kicking of the ball itself is pointless. That has no intrinsic value. It is clearly worthless. But we care about it! So actually it’s worth a lot! It’s driving economies and generating debate and making people wear scarves of particular colors.

TV shows. Religions. Novels. Everything! Everything in the world has value if someone cares about it! And only then!

This is a major background theme in Providence, by the way, which I have never seen anyone notice. I actually really want to talk about it sometime but can’t because I have to spoil the whole novel. Anyway, whenever I get to thinking that we’re all powerless motes in a maelstrom of external forces, and have no free will, I remember I can make something important by caring about it. And no-one can stop me! That’s the thing! I can care about whatever I like! Grass! Kids’ netball! Background themes in novels! You might think these things are stupid and worthless, but too late! I already cared about them! You know what that home-stitched doll of Marlene from Apathy and Other Small Victories is worth on eBay? Something! Because I like it!

Caring is amazing. As far as I can figure out, it’s the sole reason our existence is more than a bunch of physics: You can care about anything, at any time, for any reason. And when you do, you change the universe.

Nobody knows how this happens! We have no idea what makes someone care! We have only been able to persuade people to act like they care, which, okay, is pretty good, but not the same thing. (I once wrote 90% of a novel that I didn’t really care about. It was not the same.) Making people act like they care about things they actually don’t is a fundamental part of our world economy; just imagine if we couldn’t do that. I mean, you think there’s a staffing shortage now. Caring is so important, we pour unthinkable amounts of time and money into faking it.

Then there’s the other part. If you stop caring, you can kill things. Everything has a threshold, and when it receives less care than that, it dies. It just dies. And, again, you can do this in your head. You don’t need to make a plan. You don’t need to perform any particular deed. You can just stop caring. See how long that thing lasts.

The Earlickers of Twitch

You can find people doing anything on the internet if you want, but you probably don’t. We all find our boundaries, I think, beyond which we’re fine with not knowing the details. But we know it’s out there—there’s nothing we’d be surprised to hear you can find on the internet, because of course you can.

Still, I’d like to present an online service in which Amazon.com pays women to lick plastic ears.

An earlicker at work.

Some earlickers are gentle and sweet, as if the plastic ears might be ticklish. Others you’d think are trying to extract the last bit of jam from a deep jar. Each earlicker has her own style. Most break up the earlicking with light conversation, but a few advertise NO TALK, if you prefer your earlickers just to focus on the ears, please.

It’s important to note that Amazon.com doesn’t want earlickers. Amazon did not, I’m pretty sure, set out to create an earlicking market, and it would probably like them to go away. Nor do the earlickers themselves particularly want to be earlicking—these aren’t earlickers from way back, who finally found a commercial platform to do what they love. Oh no. This is one of those situations that came about despite everyone’s best intentions.

At its core—right down in the canal, if you like—this is a language problem. The earlickers exist because it’s hard to say what you mean.

Amazon.com owns Twitch, which you might have heard of: It’s a streaming platform for watching other people play video games rather than playing them yourself… although that’s an old-school way of describing it, laced with the same condescension with which my parents viewed us 80s & 90s kids who’d do anything if it was on a screen.

Amazon wants Twitch to keep doing what it’s doing: attract a mainstream audience where mainstream companies can advertise their mainstream products. But since anyone can become a Twitch streamer with a phone and some spare time, the site needs content rules. There’s no end of streamers to choose from, you see, and the audience skews young and male. It’s a viewers’ market, and the viewers quite like boobs.

So Twitch bans sexually suggestive content. See? It says it right here. No sexually suggestive content.

But that’s a bit vague, if you’re a streamer. If your income depends on staying on the right side of the rules, you want to know exactly where the lines are—whether you risk being deplatformed for doing a dance, for example, or going for a swim. Or licking plastic ears.

And Twitch—wanting to be transparent and helpful and not get pitchforked by a social media mob every time a popular streamer is or is not banned for crossing or not crossing the line—has obliged by writing policy docs to cover as many specific situations as possible. “Gestures directed towards breasts” are prohibited, for example, while “cleavage is unrestricted as long as coverage requirements are met.” (This is why streams are hosted by women with grand decolletage who don’t talk about it.)

You want details? Twitch has details. Twitch has precise rules for every scenario you can think of:

For streams dedicated to body art, full chest coverage is not required, but those who present as women must completely cover their nipples & areola with a layer of non-transparent clothing or a paint & latex combination (artist-grade pasties, tape, latex or similar alternatives are acceptable).

Or rather, almost every scenario. Because you can’t think of everything. Even if you cover everything that’s happening now, you can’t anticipate what people will come up with next.

The plastic ears with which the earlickers ply their trade are special microphones. They’re not cheap. You need to make a capital investment to become an earlicker—which implies the existence of earlickers who sunk their savings into a 3Dio Free Space but never managed to make a living from it, and now the ears sit in a corner of their room, the lobes gathering dust, a symbol of regret.

But these microphones are the best (I assume) at capturing wet, intimate earlicking sounds, which, in the viewer’s headphones, create the auditory illusion that they are having their own ears licked. This experience can range from erotic to irritating, but it’s clearly, clearly sexually suggestive.

However, earlicking is not specifically mentioned in Twitch’s ruleset. And there’s a thin, artist-grade pasties veneer of credibility because earlicking is similar to ASMR, i.e. meditation via crinkly sounds. It’s difficult to find the words to express objectively how one is different from the other.

As someone who runs their own site of user-generated content, I’ve hit this paradox myself, where the more specific I make the site rules, the weirder behavior it seems to encourage. While the ruleset relies on broad, sweeping language—we may not be able to define it, but we know it when we see it—it’s relatively easy for site moderators to maintain consistent, common-sense standards. But the more specific and objective the wording becomes—which users want; they crave detail—the more bizarre corner cases pop up, which aren’t quite covered by the language, and which explode in popularity because now they’re the most boundary-pushing-yet-allowable examples of the type.

That’s how you get earlickers.

You can find the earlickers of Twitch here. (Warning: sexually suggestive.)

Messaging

You know, I think we’ve gone too far on this messaging thing. Not messaging as in sending each other messages. That’s fine. The more messages, the better. Messaging as in, How do I make an idea palatable to idiots.

Obviously messaging works. If you have an idea you need to get into people’s heads, you should think about messaging. People are busy. They pay no attention. When people hear an idea, they take one piece of it way out of context and form an opinion based on that, then refuse to change it until the end of time. You have more success if you tailor your message to be charming and digestible.

That’s fine. But I feel like we’ve begun to demand good messaging for everything, even when we’re not idiots. Now we think: If your messaging isn’t great, I’m out already. I’m not even going to entertain your idea, because your messaging sucks. It might be a good idea, but you couldn’t even get your messaging right, so forget it.

Maybe it’s a natural reaction to being bombarded by marketing all the time. Every day, sounds and colors and movements try to catch our attention, most of which we manage to fend off. It’s wearying, so maybe it’s a relief to encounter some messy, confusing messaging that allows you to dismiss it right away, with no further brain-power required.

But this means abdicating responsibility to the messengers. It allows messaging, rather than the thing being messaged, to determine what we think about it. I don’t love the situation where we’re all so busy and distracted that there could be a, oh, I don’t know, a global pandemic and a free vaccine, and a valid argument against taking it would be, But the messaging was terrible.
